AI’s Environmental Impact: A Critical Review

Artificial intelligence has changed how we process information, automate tasks, and make decisions across industries. Yet behind every ChatGPT response, machine learning model, and AI-powered system lies a substantial environmental cost that remains largely invisible to end users. The question of how ChatGPT harms the environment extends far beyond a single platform: it encompasses the entire ecosystem of AI infrastructure, including the energy, water, and raw materials required to train and operate these systems at scale.

Large language models like ChatGPT demand extraordinary computational resources. Training a single state-of-the-art AI model can consume as much electricity as more than a hundred households use in a year. Add the inference load from the millions of queries processed every day, and the cumulative environmental burden grows considerably. This article examines the multifaceted environmental implications of AI systems, from energy consumption and carbon emissions to water usage, electronic waste, and the broader systemic impacts on our planet’s ecosystems and climate.

Energy Consumption and Carbon Emissions

The energy footprint of modern AI systems represents one of the most significant environmental concerns in the technology sector. Training large language models requires processing massive datasets through complex neural networks, drawing megawatts of power continuously over weeks or months. Research indicates that training GPT-3, a predecessor to current models, consumed approximately 1,287 megawatt-hours of electricity, roughly equivalent to the annual electricity consumption of 120 average American households.
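The household comparison can be checked with simple arithmetic. The sketch below is illustrative only: the household figure of about 10.7 MWh per year and the 30-day run length are assumptions introduced here, not values from this article, and the function names are hypothetical.

```python
# Illustrative arithmetic; constants marked "assumed" are not from the article.
TRAINING_ENERGY_MWH = 1287.0     # GPT-3 training estimate cited in the text
HOUSEHOLD_MWH_PER_YEAR = 10.7    # assumed average US household electricity use
TRAINING_DAYS = 30               # assumed (hypothetical) length of the run


def household_equivalents(energy_mwh: float, household_mwh: float) -> float:
    """Number of household-years of electricity matching an energy total."""
    return energy_mwh / household_mwh


def average_power_mw(energy_mwh: float, days: float) -> float:
    """Average power draw in megawatts for a run lasting `days` days."""
    return energy_mwh / (days * 24)


if __name__ == "__main__":
    homes = household_equivalents(TRAINING_ENERGY_MWH, HOUSEHOLD_MWH_PER_YEAR)
    power = average_power_mw(TRAINING_ENERGY_MWH, TRAINING_DAYS)
    print(f"Household-years: {homes:.0f}")
    print(f"Average draw: {power:.2f} MW")
```

Under these assumptions the result is about 120 household-years, and the run averages a little under 2 MW of continuous power.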

The carbon intensity of this energy consumption depends critically on the energy grid’s composition. When AI training facilities operate in regions powered primarily by fossil fuels, the carbon emissions multiply substantially. A single training run can generate hundreds of metric tons of CO2 equivalent emissions. Beyond training, the inference phase—when users interact with deployed AI systems—creates ongoing energy demands. ChatGPT processes millions of queries daily, each requiring computational resources and contributing to cumulative emissions.
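The dependence on grid composition comes down to one multiplication: emissions equal energy used times the carbon intensity of the supplying grid. The sketch below assumes three illustrative intensity values (hypothetical round numbers, not measurements) and applies them to the GPT-3 energy estimate cited earlier.

```python
# Emissions = energy used x carbon intensity of the supplying grid.
TRAINING_ENERGY_MWH = 1287.0  # GPT-3 training estimate cited in this article

# Assumed, illustrative grid intensities in tonnes CO2e per MWh.
ASSUMED_GRID_INTENSITY = {
    "coal_heavy": 0.80,
    "mixed": 0.40,
    "low_carbon": 0.05,
}


def training_emissions_tonnes(energy_mwh: float, intensity_t_per_mwh: float) -> float:
    """Return emissions in tonnes CO2e for a given energy use and grid intensity."""
    return energy_mwh * intensity_t_per_mwh


if __name__ == "__main__":
    for grid, intensity in ASSUMED_GRID_INTENSITY.items():
        tonnes = training_emissions_tonnes(TRAINING_ENERGY_MWH, intensity)
        print(f"{grid}: {tonnes:.0f} t CO2e")
```

With these assumed intensities, the same training run ranges from roughly 64 to roughly 1,030 tonnes of CO2 equivalent, which is why siting and energy sourcing matter as much as model size.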

Seen as a case of human-environment interaction, AI shows how technological advancement creates new environmental pressures. The expansion of AI capabilities has so far gone hand in hand with increased energy demand. According to research from the International Energy Agency, data centers globally account for approximately 1-2% of worldwide electricity consumption, with AI workloads representing the fastest-growing segment. Projections suggest that without intervention, AI-related energy consumption could double within five years.
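A doubling within five years can be restated as a yearly growth rate, which is often easier to compare against efficiency trends. The following sketch assumes constant compound growth (a simplification) and uses hypothetical function names and an arbitrary starting index of 100.

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Constant yearly growth rate that yields `multiple` after `years` years."""
    return multiple ** (1.0 / years) - 1.0


def project(start: float, rate: float, years: int) -> list[float]:
    """Year-by-year values starting from `start` and growing at `rate`."""
    return [start * (1.0 + rate) ** year for year in range(years + 1)]


if __name__ == "__main__":
    rate = implied_annual_growth(2.0, 5)
    print(f"Implied growth: {rate:.1%} per year")
    # Index of 100 is an arbitrary baseline, not a measured quantity.
    print([round(value, 1) for value in project(100.0, rate, 5)])
```

Under the constant-growth assumption, doubling in five years corresponds to growth of about 14.9% per year.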

The rebound effect complicates this picture further. As AI becomes more efficient and accessible, usage tends to grow rapidly, potentially offsetting efficiency gains. This phenomenon, known as the Jevons paradox, suggests that technological improvements may actually increase total resource consumption rather than decrease it. The economics of AI deployment incentivize continuous scaling, creating a system where environmental costs scale alongside capabilities.
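The rebound effect can be made concrete with a toy calculation: total energy is energy per query times the number of queries, so an efficiency gain is cancelled whenever usage grows faster than efficiency improves. All numbers below are hypothetical and chosen only to illustrate the mechanism.

```python
def total_energy_wh(energy_per_query_wh: float, queries: float) -> float:
    """Total energy as per-query energy times query volume."""
    return energy_per_query_wh * queries


def net_change_factor(efficiency_gain: float, usage_growth: float) -> float:
    """Ratio of new total energy to old total energy.

    efficiency_gain: factor by which per-query energy falls (2.0 = half the energy).
    usage_growth: factor by which query volume rises (3.0 = three times the queries).
    """
    return usage_growth / efficiency_gain


if __name__ == "__main__":
    # Hypothetical baseline: 0.3 Wh per query, 1 million queries.
    before = total_energy_wh(0.3, 1_000_000)
    # Hypothetical scenario: per-query energy halves, usage triples.
    after = total_energy_wh(0.3 / 2.0, 1_000_000 * 3.0)
    print(f"Before: {before:.0f} Wh, after: {after:.0f} Wh")
    print(f"Net change: x{net_change_factor(2.0, 3.0):.2f}")
```

In this scenario a twofold efficiency improvement still leaves total consumption 1.5 times higher, because usage tripled.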

Comparative analysis reveals troubling trends. By some estimates, a single large model training run can emit more CO2 than 1,500 passenger flights between New York and San Francisco. Given that major technology companies train hundreds of models annually, the aggregate impact becomes profound. The lack of standardized measurement and reporting frameworks means many environmental costs remain uncounted in corporate sustainability reports.

Water Usage in AI Infrastructure

An often-overlooked environmental consequence of AI systems involves massive water consumption. Data centers require enormous quantities of water for cooling systems that prevent overheating of servers. This demand becomes particularly acute in regions where water scarcity already constrains development and agricultural productivity. Major technology companies have begun disclosing water usage metrics, revealing that some data centers consume millions of gallons daily.

The water intensity of AI infrastructure varies by location and cooling technology employed. Traditional cooling systems can require 15-50 liters of water per megawatt-hour of electricity consumed, and reported figures vary widely with climate and facility design. Advanced cooling techniques reduce this ratio, but still demand substantial quantities. In water-stressed regions, data center expansion directly competes with agricultural irrigation, municipal water supplies, and ecosystem needs. Communities hosting data centers have reported declining groundwater levels and stressed aquifer systems.

Water quality adds complexity to impact assessment. Cooling water discharge can carry treatment chemicals and excess heat, affecting downstream ecosystems and water availability for other uses. When returned to natural systems, heated water disrupts aquatic habitats and reduces oxygen availability for fish and other organisms. This degradation of the biotic environment cascades through food webs and ecosystem services.

Geographic concentration of data centers amplifies water stress in specific regions. Arizona, Texas, and other water-scarce states host significant AI infrastructure, intensifying competition for limited water resources. International data center locations in water-constrained areas of Europe, Asia, and Africa create similar pressures. The World Bank has identified water scarcity as a critical constraint on development in numerous regions, yet AI infrastructure expansion proceeds with limited consideration of these systemic pressures.

Resource Extraction and Electronic Waste

The physical hardware underlying AI systems requires extraction of rare earth elements, precious metals, and other finite resources. Semiconductors, batteries, and other server components depend on cobalt, lithium, tantalum, and tungsten, minerals whose extraction involves significant environmental and social costs. Mining operations destroy habitats, generate toxic waste, and often occur in ecologically sensitive regions with weak environmental protections.

The supply chain for AI hardware extends globally, with extraction concentrated in developing nations and manufacturing distributed across multiple countries. This fragmentation obscures environmental accountability. Cobalt mining in the Democratic Republic of Congo, for instance, generates severe water pollution and respiratory disease in local communities, yet remains invisible in most environmental impact assessments of AI systems.

Electronic waste represents the end-of-life phase of this resource cycle. As AI systems require constant hardware upgrades to maintain competitive performance, older equipment enters waste streams. E-waste contains hazardous materials including lead, mercury, and cadmium that contaminate soil and groundwater when improperly disposed. The rapid obsolescence of AI hardware accelerates this waste generation relative to other technology sectors.

The circular economy concept offers theoretical solutions but faces implementation barriers. Recycling rates for electronic waste remain below 20% globally, with most material ending up in landfills or informal recycling operations in developing countries. These informal sectors expose workers to toxic materials while generating minimal recovery value. Scaling circular approaches requires fundamental changes to hardware design, supply chain structures, and economic incentives—transformations unlikely without regulatory intervention.

Any effort to reduce AI’s carbon footprint must also account for supply chain emissions from hardware production. Manufacturing a single semiconductor chip can generate emissions equivalent to operating it for years. The cumulative effect of producing billions of chips annually for AI infrastructure creates substantial but often-uncounted emissions.

Ecosystem and Biodiversity Impacts

The environmental consequences of AI infrastructure extend beyond direct resource consumption to affect ecosystem integrity and biodiversity. Data center construction requires land clearing that destroys habitats and fragments ecosystems. The thermal and water pollution from cooling systems degrades aquatic ecosystems, affecting species reproduction, migration patterns, and population viability.

Electromagnetic fields from data center operations and associated infrastructure may act as an additional stressor on wildlife. Research on electromagnetic effects on animal navigation and behavior remains incomplete, though some studies suggest impacts on migratory birds, insects, and marine mammals. If confirmed, the expansion of data center networks would add to a landscape already increasingly hostile to biodiversity.

Climate change induced by AI-related emissions compounds ecosystem stress. Rising temperatures alter precipitation patterns, extend growing seasons unpredictably, and shift species ranges. These changes cascade through food webs and ecological communities. Species with narrow environmental tolerances face extinction risks as their habitats shift beyond suitable conditions. Keystone species supporting entire ecosystems become vulnerable, threatening ecosystem services humanity depends upon.

Powering AI infrastructure with renewable energy offers a partial solution but cannot fully address ecosystem impacts. Even renewable energy development requires habitat conversion and generates environmental costs. The scale of energy needed for AI systems exceeds practical renewable capacity in most regions, necessitating either dramatic energy efficiency improvements or reduced AI deployment.

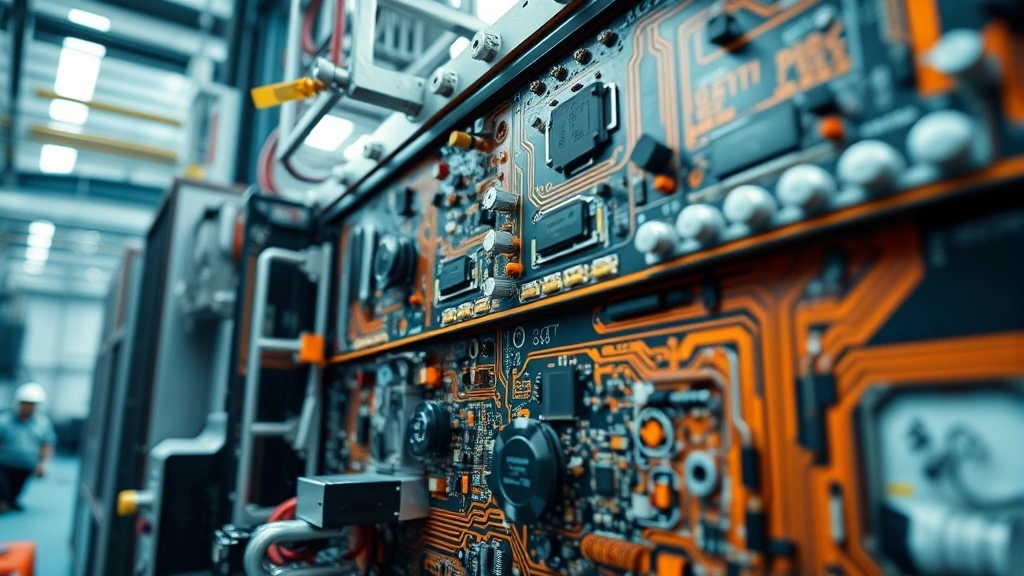

Data Center Operations and Cooling Systems

Data centers represent the physical manifestation of AI’s environmental impact. These facilities operate continuously, consuming massive amounts of electricity to power servers and cooling systems. The power usage effectiveness (PUE) metric measures efficiency as the ratio of total facility power to IT equipment power. While leading data centers achieve PUE values near 1.1, industry averages exceed 1.5, meaning a substantial share of energy goes to cooling and other overhead rather than computation.
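Because PUE is defined as total facility power divided by IT equipment power, the share of energy spent on cooling and other overhead follows directly as 1 - 1/PUE. The sketch below works this out for the two PUE values mentioned above; the function names and the 1,000 MWh IT load are hypothetical.

```python
def facility_energy_mwh(it_energy_mwh: float, pue: float) -> float:
    """Total facility energy implied by an IT load and a PUE value."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0 by definition")
    return it_energy_mwh * pue


def overhead_share(pue: float) -> float:
    """Fraction of total facility energy not used by IT equipment."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0 by definition")
    return 1.0 - 1.0 / pue


if __name__ == "__main__":
    it_load_mwh = 1000.0  # hypothetical IT energy use
    for pue in (1.1, 1.5):
        total = facility_energy_mwh(it_load_mwh, pue)
        print(f"PUE {pue}: total {total:.0f} MWh, overhead {overhead_share(pue):.1%}")
```

At a PUE of 1.1 about 9% of facility energy is overhead, while at 1.5 the share rises to one third.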

Cooling technology selection significantly influences environmental outcomes. Air cooling, the traditional approach, requires mechanical systems consuming additional electricity. Liquid cooling reduces energy requirements but introduces chemical and thermal pollution risks. Immersion cooling in dielectric fluids offers further efficiency gains but creates new environmental challenges if fluids leak into surrounding ecosystems.

Location decisions for data centers reflect optimization for cost and energy availability rather than environmental impact. Proximity to cheap electricity sources—often fossil fuel plants or hydroelectric facilities—drives siting decisions. This creates perverse incentives where environmental costs become externalized rather than incorporated into site selection. Water-rich regions attract data centers precisely because water availability enables cheap cooling, but this competition for water intensifies ecosystem stress.

The geographic distribution of AI infrastructure creates uneven environmental burdens. Developing nations hosting data centers for wealthy nations’ AI services bear disproportionate environmental costs while receiving minimal economic benefits. This represents a form of environmental colonialism where ecosystem degradation in one region enables consumption patterns in another.

Mitigation Strategies and Future Directions

Addressing AI’s environmental impact requires multifaceted approaches spanning technology, policy, and economic structures. Energy efficiency improvements in hardware design and algorithms can reduce computational requirements. Techniques like model compression, knowledge distillation, and specialized processors lower energy per inference. However, efficiency gains historically trigger increased usage, potentially negating environmental benefits.

Transitioning data centers to renewable energy sources represents another critical pathway. Technology companies have made renewable energy commitments, but actual implementation lags pledges. Renewable energy development itself creates environmental costs through land use and habitat disruption. Balancing AI energy demands with renewable capacity constraints requires either dramatic efficiency improvements or restraint in AI deployment growth.

Policy interventions can internalize environmental costs into AI development and deployment. Carbon pricing, environmental impact assessments, and mandatory sustainability reporting create incentives for efficiency. Regulations on data center siting, water usage, and waste management establish minimum environmental standards. However, regulatory approaches face challenges from industry lobbying and competitive pressure favoring jurisdictions with minimal environmental requirements.

Systemic approaches question whether current AI deployment trajectories align with climate and sustainability objectives. The International Energy Agency and UNEP have highlighted tensions between AI expansion and climate goals. Some researchers argue that AI’s environmental costs exceed its societal benefits in many applications, suggesting selective deployment focusing on high-value uses.

Circular economy approaches to hardware manufacturing, recycling, and reuse can reduce resource extraction and waste. Extended producer responsibility policies hold manufacturers accountable for end-of-life management. Designing hardware for longevity and repairability rather than obsolescence reduces replacement rates and associated environmental costs.

Transparency and accountability mechanisms enhance environmental governance. Standardized environmental impact reporting frameworks enable comparison across AI systems and companies. Third-party verification ensures accuracy and comparability. Public disclosure of environmental costs supports informed decision-making by consumers, investors, and policymakers.

Broader sustainability considerations intersect with AI environmental impact. Examples from other sectors, such as sustainable fashion brands, suggest that environmental responsibility can align with business success when properly incentivized. Similar models could guide AI development toward sustainability.

Research into alternative computational paradigms offers longer-term possibilities. Neuromorphic computing, quantum computing, and other approaches might ultimately reduce energy requirements for AI tasks. However, these remain largely experimental and may introduce new environmental challenges during development and deployment phases.

FAQ

How much energy does ChatGPT specifically consume?

ChatGPT’s exact energy consumption remains proprietary, but estimates suggest each query consumes roughly 0.3 watt-hours of electricity. With millions of daily queries, cumulative consumption reaches megawatt-hours daily. Per-query inference energy is tiny compared with a training run, but it adds up substantially across billions of interactions over time.
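The step from watt-hours per query to megawatt-hours per day is a unit conversion. The sketch below uses the 0.3 Wh estimate from this answer; the daily query volumes are hypothetical placeholders, since actual figures are not public.

```python
WH_PER_QUERY = 0.3        # per-query estimate quoted in this answer
WH_PER_MWH = 1_000_000    # unit conversion: 1 MWh = 1,000,000 Wh


def daily_energy_mwh(queries_per_day: float, wh_per_query: float = WH_PER_QUERY) -> float:
    """Daily inference energy in MWh for a given query volume."""
    return queries_per_day * wh_per_query / WH_PER_MWH


if __name__ == "__main__":
    # Hypothetical query volumes, for illustration only.
    for volume in (10_000_000, 100_000_000, 1_000_000_000):
        print(f"{volume:>13,} queries/day -> {daily_energy_mwh(volume):.0f} MWh/day")
```

At an assumed 10 million queries per day this comes to about 3 MWh daily, and at 1 billion queries per day to about 300 MWh.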

Can renewable energy solve AI’s environmental problems?

Renewable energy represents necessary but insufficient solutions. While transitioning to renewables reduces carbon emissions, it doesn’t address water consumption, resource extraction, or ecosystem impacts. Additionally, renewable capacity constraints limit how much AI deployment can expand without competing with other electricity demands.

Why don’t AI companies use more efficient models?

Market pressures incentivize capability expansion over efficiency. Larger models achieve better performance on benchmarks, attracting users and investment. Economic incentives favor deployment at scale over optimization for environmental impact. Without regulatory or consumer pressure, efficiency improvements remain secondary priorities.

What’s the environmental cost of training versus using AI models?

Training represents the largest single, one-time environmental burden, consuming substantial energy over a concentrated period. However, inference (using trained models) creates ongoing cumulative impacts across billions of interactions. Over a model’s lifetime, inference energy typically exceeds training energy when the model is deployed at scale.
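A rough break-even estimate shows when cumulative inference overtakes training. The sketch below combines two figures quoted elsewhere in this article, the 1,287 MWh GPT-3 training estimate and the 0.3 Wh per-query estimate, purely for illustration: they describe different models, and the daily query volume is a hypothetical assumption.

```python
TRAINING_ENERGY_MWH = 1287.0   # GPT-3 training estimate quoted in this article
WH_PER_QUERY = 0.3             # per-query estimate quoted in this article
WH_PER_MWH = 1_000_000         # unit conversion: 1 MWh = 1,000,000 Wh


def break_even_queries(training_mwh: float, wh_per_query: float) -> float:
    """Number of queries whose total energy equals the training energy."""
    return training_mwh * WH_PER_MWH / wh_per_query


def break_even_days(training_mwh: float, wh_per_query: float, queries_per_day: float) -> float:
    """Days of operation until cumulative inference energy matches training."""
    return break_even_queries(training_mwh, wh_per_query) / queries_per_day


if __name__ == "__main__":
    queries = break_even_queries(TRAINING_ENERGY_MWH, WH_PER_QUERY)
    # 100 million queries per day is an assumed volume, not a reported figure.
    days = break_even_days(TRAINING_ENERGY_MWH, WH_PER_QUERY, 100_000_000)
    print(f"Break-even after {queries / 1e9:.2f} billion queries (~{days:.0f} days)")
```

Under these assumptions inference matches the training energy after about 4.3 billion queries, or roughly six weeks of operation at the assumed volume.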

How does AI’s environmental impact compare to other industries?

By some estimates, the energy use and emissions of data centers are comparable in scale to those of sectors such as aviation. The key difference is trajectory: AI is a rapidly growing source of emissions at a time when society is pursuing emissions reduction goals and other sectors are under pressure to decarbonize.

Conclusion

The environmental implications of artificial intelligence demand serious consideration as deployment scales globally. From energy consumption and carbon emissions to water usage, resource extraction, and ecosystem degradation, AI systems create multifaceted environmental pressures. While technological solutions exist for improving efficiency, fundamental tensions between AI expansion and climate sustainability goals require honest assessment. World Bank analyses and research published in Nature increasingly recognize these trade-offs. Policymakers, technology companies, and society must collectively determine whether current AI deployment trajectories align with environmental and climate objectives, or whether selective deployment and efficiency prioritization should guide development. The answer will shape both technological futures and environmental outcomes for decades to come.